Synopsis

Information theory is the mathematical study of operations on data, such as compression, storage, and communication. This course introduces the principles of information and coding theory, including a fundamental understanding of data compression and of reliable communication over noisy channels. It covers information measures, entropy, mutual information, and channel capacity, which form the basis of the mathematical theory of communication.

Contents and Educational Objectives

Short overview of probability theory

- Event probabilities and probability density functions

Lossless compression

- Memoryless sources/stationary sources

- Uniquely decodable codes, prefix-free codes, minimum encoding length

- Huffman codes, coding theorem for stationary sources
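As a small illustration of the Huffman codes listed above, the following sketch computes the codeword lengths of a binary Huffman code for a given probability mass function. The function name `huffman_lengths` and the example distribution are assumptions made for illustration and are not taken from the course material.

```python
import heapq

def huffman_lengths(probs):
    """Return the codeword lengths of a binary Huffman code for the pmf `probs`."""
    # Heap entries: (probability, tie breaker, symbol indices in this subtree).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    counter = len(probs)  # unique tie breaker for merged nodes
    while len(heap) > 1:
        # Merge the two least probable subtrees.
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        # Every symbol below the new node gains one code bit.
        for s in s1 + s2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, counter, s1 + s2))
        counter += 1
    return lengths

# Hypothetical dyadic source: the average length equals the entropy here.
probs = [0.5, 0.25, 0.125, 0.125]
lengths = huffman_lengths(probs)
average_length = sum(p * l for p, l in zip(probs, lengths))
```

For this dyadic example the average codeword length is 1.75 bits, which coincides with the source entropy; for general distributions the Huffman code stays within one bit of the entropy.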

Information Measures

- Entropy, entropy rate, conditional entropy, mutual information
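To make the information measures above concrete, here is a minimal sketch that evaluates entropy and mutual information for discrete distributions, using the identity I(X;Y) = H(X) + H(Y) - H(X,Y). The function names and the example joint distributions are assumptions chosen for illustration only.

```python
import math

def entropy(p):
    """Shannon entropy in bits of a probability vector p (zero entries are skipped)."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def mutual_information(joint):
    """I(X;Y) in bits from a joint pmf given as a list of rows joint[x][y]."""
    px = [sum(row) for row in joint]          # marginal of X
    py = [sum(col) for col in zip(*joint)]    # marginal of Y
    flat = [v for row in joint for v in row]  # joint pmf as a flat vector
    return entropy(px) + entropy(py) - entropy(flat)

# Hypothetical examples: independent fair bits, and two perfectly correlated bits.
independent = [[0.25, 0.25], [0.25, 0.25]]
identical = [[0.5, 0.0], [0.0, 0.5]]
```

With these inputs, the independent pair carries no mutual information, while the perfectly correlated pair carries one full bit.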

Channel Coding Theorem

- Discrete Memoryless Channels (DMC)

- Channel capacity with and without feedback

- Joint source-channel coding theorem

- Capacity bounds for finite-length codes

Continuous-Valued Channel Coding

- Differential entropy

- Capacity of AWGN channel

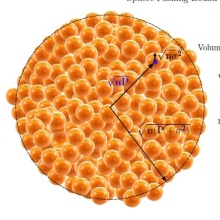

- Sphere packing

Channel Coding in Practice

- Linear codes and minimum distance

- Maximum likelihood decoding

- Minimum-distance decoding

Polar Codes: A Practical Capacity-Achieving Channel Code for DMCs

- Construction and successive cancellation decoding

- Error analysis and polarization effect

Introduction to Iterative Decoding

- LDPC Codes

- Belief-propagation decoding

Capacity of Band-limited Waveform Channels

- Waterfilling of parallel Gaussian channels
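The waterfilling idea named above can be sketched numerically: power is poured over parallel Gaussian channels up to a common water level, and channels whose noise exceeds that level receive nothing. The function name `waterfill`, the bisection search, and the example noise values are assumptions for illustration, not the course's own formulation.

```python
import math

def waterfill(noise, total_power, iters=100):
    """Allocate total_power over parallel Gaussian channels with noise variances `noise`.

    Uses bisection on the water level mu so that sum(max(mu - n, 0)) == total_power.
    Returns (per-channel powers, capacity in bits per channel use).
    """
    lo, hi = min(noise), max(noise) + total_power
    for _ in range(iters):
        mu = (lo + hi) / 2
        used = sum(max(mu - n, 0.0) for n in noise)
        if used > total_power:
            hi = mu  # water level too high
        else:
            lo = mu  # water level too low (or exact)
    mu = (lo + hi) / 2
    powers = [max(mu - n, 0.0) for n in noise]
    capacity = sum(0.5 * math.log2(1 + p / n) for p, n in zip(powers, noise))
    return powers, capacity

# Hypothetical example: three channels, the noisiest one ends up unused.
powers, capacity = waterfill([1.0, 2.0, 4.0], total_power=3.0)
```

In this example the water level settles at 3, so the allocation is approximately 2, 1, and 0 units of power, and the channel with noise variance 4 stays dry.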

Course Information

6 ECTS Credits

Lectures

| Lecturer | Prof. Dr.-Ing. Stephan ten Brink and Dr.-Ing. Christian Senger |
| Time Slot | Wednesday, 14:00-15:30 |
| Lecture Hall | 2.348 (ETI2) |
| Weekly Credit Hours | 2 |

Exercises

| Lecturer | Jannis Clausius and Daniel Tandler |
| Time Slot | Thursday, 14:00-15:30 |
| Lecture Hall | 2.348 (ETI2) |
| Weekly Credit Hours | 2 |

Stephan ten Brink

Prof. Dr.-Ing., Director of the Institute

Christian Senger

PD Dr.-Ing., Deputy Director